forked from mindspore-Ecosystem/mindspore

Implements of masked seq2seq pre-training for language generation.

This commit is contained in:

parent

24be3f82ad

commit

065f32e0e5

|

|

@ -0,0 +1,592 @@

|

|||

|

||||

|

||||

<!-- TOC -->

|

||||

|

||||

- [MASS: Masked Sequence to Sequence Pre-training for Language Generation Description](#googlenet-description)

|

||||

- [Model architecture](#model-architecture)

|

||||

- [Dataset](#dataset)

|

||||

- [Features](#features)

|

||||

- [Script description](#script-description)

|

||||

- [Data Preparation](#Data-Preparation)

|

||||

- [Tokenization](#Tokenization)

|

||||

- [Byte Pair Encoding](#Byte-Pair-Encoding)

|

||||

- [Build Vocabulary](#Build-Vocabulary)

|

||||

- [Generate Dataset](#Generate-Dataset)

|

||||

- [News Crawl Corpus](#News-Crawl-Corpus)

|

||||

- [Gigaword Corpus](#Gigaword-Corpus)

|

||||

- [Cornell Movie Dialog Corpus](#Cornell-Movie-Dialog-Corpus)

|

||||

- [Configuration](#Configuration)

|

||||

- [Training & Evaluation process](#Training-&-Evaluation-process)

|

||||

- [Weights average](#Weights-average)

|

||||

- [Learning rate scheduler](#Learning-rate-scheduler)

|

||||

- [Model description](#model-description)

|

||||

- [Performance](#performance)

|

||||

- [Results](#results)

|

||||

- [Training Performance](#training-performance)

|

||||

- [Inference Performance](#inference-performance)

|

||||

- [Environment Requirements](#environment-requirements)

|

||||

- [Platform](#Platform)

|

||||

- [Requirements](#Requirements)

|

||||

- [Get started](#get-started)

|

||||

- [Pre-training](#Pre-training)

|

||||

- [Fine-tuning](#Fine-tuning)

|

||||

- [Inference](#Inference)

|

||||

- [Description of random situation](#description-of-random-situation)

|

||||

- [others](#others)

|

||||

- [ModelZoo Homepage](#modelzoo-homepage)

|

||||

|

||||

<!-- /TOC -->

|

||||

|

||||

|

||||

# MASS: Masked Sequence to Sequence Pre-training for Language Generation Description

|

||||

|

||||

[MASS: Masked Sequence to Sequence Pre-training for Language Generation](https://www.microsoft.com/en-us/research/uploads/prod/2019/06/MASS-paper-updated-002.pdf) was released by MicroSoft in June 2019.

|

||||

|

||||

BERT(Devlin et al., 2018) have achieved SOTA in natural language understanding area by pre-training the encoder part of Transformer(Vaswani et al., 2017) with masked rich-resource text. Likewise, GPT(Raddford et al., 2018) pre-trains the decoder part of Transformer with masked(encoder inputs are masked) rich-resource text. Both of them build a robust language model by pre-training with masked rich-resource text.

|

||||

|

||||

Inspired by BERT, GPT and other language models, MicroSoft addressed [MASS: Masked Sequence to Sequence Pre-training for Language Generation](https://www.microsoft.com/en-us/research/uploads/prod/2019/06/MASS-paper-updated-002.pdf) which combines BERT's and GPT's idea. MASS has an important parameter k, which controls the masked fragment length. BERT and GPT are specicl case when k equals to 1 and sentence length.

|

||||

|

||||

[Introducing MASS – A pre-training method that outperforms BERT and GPT in sequence to sequence language generation tasks](https://www.microsoft.com/en-us/research/blog/introducing-mass-a-pre-training-method-that-outperforms-bert-and-gpt-in-sequence-to-sequence-language-generation-tasks/)

|

||||

|

||||

[Paper](https://www.microsoft.com/en-us/research/uploads/prod/2019/06/MASS-paper-updated-002.pdf): Song, Kaitao, Xu Tan, Tao Qin, Jianfeng Lu and Tie-Yan Liu. “MASS: Masked Sequence to Sequence Pre-training for Language Generation.” ICML (2019).

|

||||

|

||||

|

||||

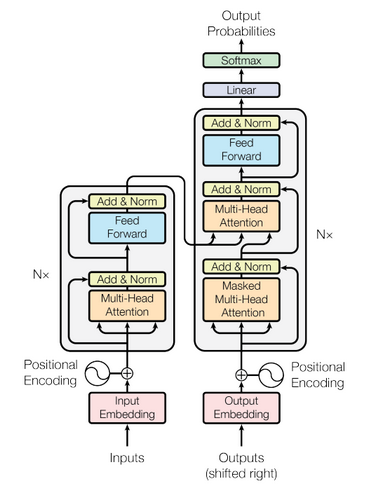

# Model architecture

|

||||

|

||||

The overall network architecture of MASS is shown below, which is Transformer(Vaswani et al., 2017):

|

||||

|

||||

MASS is consisted of 6-layer encoder and 6-layer decoder with 1024 embedding/hidden size, and 4096 intermediate size between feed forward network which has two full connection layers.

|

||||

|

||||

|

||||

|

||||

|

||||

# Dataset

|

||||

|

||||

Dataset used:

|

||||

- monolingual English data from News Crawl dataset(WMT 2019) for pre-training.

|

||||

- Gigaword Corpus(Graff et al., 2003) for Text Summarization.

|

||||

- Cornell movie dialog corpus(DanescuNiculescu-Mizil & Lee, 2011).

|

||||

|

||||

Details about those dataset could be found in [MASS: Masked Sequence to Sequence Pre-training for Language Generation](https://www.microsoft.com/en-us/research/uploads/prod/2019/06/MASS-paper-updated-002.pdf).

|

||||

|

||||

|

||||

# Features

|

||||

|

||||

Mass is designed to jointly pre train encoder and decoder to complete the task of language generation.

|

||||

First of all, through a sequence to sequence framework, mass only predicts the blocked token, which forces the encoder to understand the meaning of the unshielded token, and encourages the decoder to extract useful information from the encoder.

|

||||

Secondly, by predicting the continuous token of the decoder, the decoder can build better language modeling ability than only predicting discrete token.

|

||||

Third, by further shielding the input token of the decoder which is not shielded in the encoder, the decoder is encouraged to extract more useful information from the encoder side, rather than using the rich information in the previous token.

|

||||

|

||||

|

||||

# Script description

|

||||

|

||||

MASS script and code structure are as follow:

|

||||

|

||||

```text

|

||||

├── mass

|

||||

├── README.md // Introduction of MASS model.

|

||||

├── config

|

||||

│ ├──config.py // Configuration instance definition.

|

||||

│ ├──config.json // Configuration file.

|

||||

├── src

|

||||

│ ├──dataset

|

||||

│ ├──bi_data_loader.py // Dataset loader for fine-tune or inferring.

|

||||

│ ├──mono_data_loader.py // Dataset loader for pre-training.

|

||||

│ ├──language_model

|

||||

│ ├──noise_channel_language_model.p // Noisy channel language model for dataset generation.

|

||||

│ ├──mass_language_model.py // MASS language model according to MASS paper.

|

||||

│ ├──loose_masked_language_model.py // MASS language model according to MASS released code.

|

||||

│ ├──masked_language_model.py // Masked language model according to MASS paper.

|

||||

│ ├──transformer

|

||||

│ ├──create_attn_mask.py // Generate mask matrix to remove padding positions.

|

||||

│ ├──transformer.py // Transformer model architecture.

|

||||

│ ├──encoder.py // Transformer encoder component.

|

||||

│ ├──decoder.py // Transformer decoder component.

|

||||

│ ├──self_attention.py // Self-Attention block component.

|

||||

│ ├──multi_head_attention.py // Multi-Head Self-Attention component.

|

||||

│ ├──embedding.py // Embedding component.

|

||||

│ ├──positional_embedding.py // Positional embedding component.

|

||||

│ ├──feed_forward_network.py // Feed forward network.

|

||||

│ ├──residual_conn.py // Residual block.

|

||||

│ ├──beam_search.py // Beam search decoder for inferring.

|

||||

│ ├──transformer_for_infer.py // Use Transformer to infer.

|

||||

│ ├──transformer_for_train.py // Use Transformer to train.

|

||||

│ ├──utils

|

||||

│ ├──byte_pair_encoding.py // Apply BPE with subword-nmt.

|

||||

│ ├──dictionary.py // Dictionary.

|

||||

│ ├──loss_moniter.py // Callback of monitering loss during training step.

|

||||

│ ├──lr_scheduler.py // Learning rate scheduler.

|

||||

│ ├──ppl_score.py // Perplexity score based on N-gram.

|

||||

│ ├──rouge_score.py // Calculate ROUGE score.

|

||||

│ ├──load_weights.py // Load weights from a checkpoint or NPZ file.

|

||||

│ ├──initializer.py // Parameters initializer.

|

||||

├── vocab

|

||||

│ ├──all.bpe.codes // BPE codes table(this file should be generated by user).

|

||||

│ ├──all_en.dict.bin // Learned vocabulary file(this file should be generated by user).

|

||||

├── scripts

|

||||

│ ├──run.sh // Train & evaluate model script.

|

||||

│ ├──learn_subword.sh // Learn BPE codes.

|

||||

│ ├──stop_training.sh // Stop training.

|

||||

├── requirements.txt // Requirements of third party package.

|

||||

├── train.py // Train API entry.

|

||||

├── eval.py // Infer API entry.

|

||||

├── tokenize_corpus.py // Corpus tokenization.

|

||||

├── apply_bpe_encoding.py // Applying bpe encoding.

|

||||

├── weights_average.py // Average multi model checkpoints to NPZ format.

|

||||

├── news_crawl.py // Create News Crawl dataset for pre-training.

|

||||

├── gigaword.py // Create Gigaword Corpus.

|

||||

├── cornell_dialog.py // Create Cornell Movie Dialog dataset for conversation response.

|

||||

|

||||

```

|

||||

|

||||

|

||||

## Data Preparation

|

||||

|

||||

The data preparation of a natural language processing task contains data cleaning, tokenization, encoding and vocabulary generation steps.

|

||||

|

||||

In our experiments, using [Byte Pair Encoding(BPE)](https://arxiv.org/abs/1508.07909) could reduce size of vocabulary, and relieve the OOV influence effectively.

|

||||

|

||||

Vocabulary could be created using `src/utils/dictionary.py` with text dictionary which is learnt from BPE.

|

||||

For more detail about BPE, please refer to [Subword-nmt lib](https://www.cnpython.com/pypi/subword-nmt) or [paper](https://arxiv.org/abs/1508.07909).

|

||||

|

||||

In our experiments, vocabulary was learned based on 1.9M sentences from News Crawl Dataset, size of vocabulary is 45755.

|

||||

|

||||

Here, we have a brief introduction of data preparation scripts.

|

||||

|

||||

|

||||

### Tokenization

|

||||

Using `tokenize_corpus.py` could tokenize corpus whose text files are in format of `.txt`.

|

||||

|

||||

Major parameters in `tokenize_corpus.py`:

|

||||

|

||||

```bash

|

||||

--corpus_folder: Corpus folder path, if multi-folders are provided, use ',' split folders.

|

||||

--output_folder: Output folder path.

|

||||

--tokenizer: Tokenizer to be used, nltk or jieba, if nltk is not installed fully, use jieba instead.

|

||||

--pool_size: Processes pool size.

|

||||

```

|

||||

|

||||

Sample code:

|

||||

```bash

|

||||

python tokenize_corpus.py --corpus_folder /{path}/corpus --output_folder /{path}/tokenized_corpus --tokenizer {nltk|jieba} --pool_size 16

|

||||

```

|

||||

|

||||

|

||||

### Byte Pair Encoding

|

||||

After tokenization, BPE is applied to tokenized corpus with provided `all.bpe.codes`.

|

||||

|

||||

Apply BPE script can be found in `apply_bpe_encoding.py`.

|

||||

|

||||

Major parameters in `apply_bpe_encoding.py`:

|

||||

|

||||

```bash

|

||||

--codes: BPE codes file.

|

||||

--src_folder: Corpus folders.

|

||||

--output_folder: Output files folder.

|

||||

--prefix: Prefix of text file in `src_folder`.

|

||||

--vocab_path: Generated vocabulary output path.

|

||||

--threshold: Filter out words that frequency is lower than threshold.

|

||||

--processes: Size of process pool (to accelerate). Default: 2.

|

||||

```

|

||||

|

||||

Sample code:

|

||||

```bash

|

||||

python tokenize_corpus.py --codes /{path}/all.bpe.codes \

|

||||

--src_folder /{path}/tokenized_corpus \

|

||||

--output_folder /{path}/tokenized_corpus/bpe \

|

||||

--prefix tokenized \

|

||||

--vocab_path /{path}/vocab_en.dict.bin

|

||||

--processes 32

|

||||

```

|

||||

|

||||

|

||||

### Build Vocabulary

|

||||

Support that you want to create a new vocabulary, there are two options:

|

||||

1. Learn BPE codes from scratch, and create vocabulary with multi vocabulary files from `subword-nmt`.

|

||||

2. Create from an existing vocabulary file which lines in the format of `word frequency`.

|

||||

3. *Optional*, Create a small vocabulary based on `vocab/all_en.dict.bin` with method of `shink` from `src/utils/dictionary.py`.

|

||||

4. Persistent vocabulary to `vocab` folder with method `persistence()`.

|

||||

|

||||

Major interface of `src/utils/dictionary.py` are as follow:

|

||||

|

||||

1. `shrink(self, threshold=50)`: Shrink the size of vocabulary by filter out words frequency is lower than threshold. It returns a new vocabulary.

|

||||

2. `load_from_text(cls, filepaths: List[str])`: Load existed text vocabulary which lines in the format of `word frequency`.

|

||||

3. `load_from_persisted_dict(cls, filepath)`: Load from a persisted binary vocabulary which was saved by calling `persistence()` method.

|

||||

4. `persistence(self, path)`: Save vocabulary object to binary file.

|

||||

|

||||

Sample code:

|

||||

```python

|

||||

from src.utils import Dictionary

|

||||

|

||||

vocabulary = Dictionary.load_from_persisted_dict("vocab/all_en.dict.bin")

|

||||

tokens = [1, 2, 3, 4, 5]

|

||||

# Convert ids to symbols.

|

||||

print([vocabulary[t] for t in tokens])

|

||||

|

||||

sentence = ["Hello", "world"]

|

||||

# Convert symbols to ids.

|

||||

print([vocabulary.index[s] for s in sentence])

|

||||

```

|

||||

|

||||

For more detail, please refer to the source file.

|

||||

|

||||

|

||||

### Generate Dataset

|

||||

As mentioned above, three corpus are used in MASS mode, dataset generation scripts for them are provided.

|

||||

|

||||

#### News Crawl Corpus

|

||||

Script can be found in `news_crawl.py`.

|

||||

|

||||

Major parameters in `news_crawl.py`:

|

||||

|

||||

```bash

|

||||

Note that please provide `--existed_vocab` or `--dict_folder` at least one.

|

||||

A new vocabulary would be created in `output_folder` when pass `--dict_folder`.

|

||||

|

||||

--src_folder: Corpus folders.

|

||||

--existed_vocab: Optional, persisted vocabulary file.

|

||||

--mask_ratio: Ratio of mask.

|

||||

--output_folder: Output dataset files folder path.

|

||||

--max_len: Maximum sentence length. If a sentence longer than `max_len`, then drop it.

|

||||

--suffix: Optional, suffix of generated dataset files.

|

||||

--processes: Optional, size of process pool (to accelerate). Default: 2.

|

||||

```

|

||||

|

||||

Sample code:

|

||||

|

||||

```bash

|

||||

python news_crawl.py --src_folder /{path}/news_crawl \

|

||||

--existed_vocab /{path}/mass/vocab/all_en.dict.bin \

|

||||

--mask_ratio 0.5 \

|

||||

--output_folder /{path}/news_crawl_dataset \

|

||||

--max_len 32 \

|

||||

--processes 32

|

||||

```

|

||||

|

||||

|

||||

#### Gigaword Corpus

|

||||

Script can be found in `gigaword.py`.

|

||||

|

||||

Major parameters in `gigaword.py`:

|

||||

|

||||

```bash

|

||||

--train_src: Train source file path.

|

||||

--train_ref: Train reference file path.

|

||||

--test_src: Test source file path.

|

||||

--test_ref: Test reference file path.

|

||||

--existed_vocab: Persisted vocabulary file.

|

||||

--output_folder: Output dataset files folder path.

|

||||

--noise_prob: Optional, add noise prob. Default: 0.

|

||||

--max_len: Optional, maximum sentence length. If a sentence longer than `max_len`, then drop it. Default: 64.

|

||||

--format: Optional, dataset format, "mindrecord" or "tfrecord". Default: "tfrecord".

|

||||

```

|

||||

|

||||

Sample code:

|

||||

|

||||

```bash

|

||||

python gigaword.py --train_src /{path}/gigaword/train_src.txt \

|

||||

--train_ref /{path}/gigaword/train_ref.txt \

|

||||

--test_src /{path}/gigaword/test_src.txt \

|

||||

--test_ref /{path}/gigaword/test_ref.txt \

|

||||

--existed_vocab /{path}/mass/vocab/all_en.dict.bin \

|

||||

--noise_prob 0.1 \

|

||||

--output_folder /{path}/gigaword_dataset \

|

||||

--max_len 64

|

||||

```

|

||||

|

||||

|

||||

#### Cornell Movie Dialog Corpus

|

||||

Script can be found in `cornell_dialog.py`.

|

||||

|

||||

Major parameters in `cornell_dialog.py`:

|

||||

|

||||

```bash

|

||||

--src_folder: Corpus folders.

|

||||

--existed_vocab: Persisted vocabulary file.

|

||||

--train_prefix: Train source and target file prefix. Default: train.

|

||||

--test_prefix: Test source and target file prefix. Default: test.

|

||||

--output_folder: Output dataset files folder path.

|

||||

--max_len: Maximum sentence length. If a sentence longer than `max_len`, then drop it.

|

||||

--valid_prefix: Optional, Valid source and target file prefix. Default: valid.

|

||||

```

|

||||

|

||||

Sample code:

|

||||

|

||||

```bash

|

||||

python cornell_dialog.py --src_folder /{path}/cornell_dialog \

|

||||

--existed_vocab /{path}/mass/vocab/all_en.dict.bin \

|

||||

--train_prefix train \

|

||||

--test_prefix test \

|

||||

--noise_prob 0.1 \

|

||||

--output_folder /{path}/cornell_dialog_dataset \

|

||||

--max_len 64

|

||||

```

|

||||

|

||||

|

||||

## Configuration

|

||||

Json file under the path `config/` is the template configuration file.

|

||||

Almost all of the options and arguments needed could be assigned conveniently, including the training platform, configurations of dataset and model, arguments of optimizer etc. Optional features such as loss scale and checkpoint are also available by setting the options correspondingly.

|

||||

For more detailed information about the attributes, refer to the file `config/config.py`.

|

||||

|

||||

## Training & Evaluation process

|

||||

For training a model, the shell script `run.sh` is all you need. In this scripts, the environment variable is set and the training script `train.py` under `mass` is executed.

|

||||

You may start a task training with single device or multiple devices by assigning the options and run the command in bash:

|

||||

```bash

|

||||

sh run.sh [--options]

|

||||

```

|

||||

|

||||

The usage is shown as bellow:

|

||||

```text

|

||||

Usage: run.sh [-h, --help] [-t, --task <CHAR>] [-n, --device_num <N>]

|

||||

[-i, --device_id <N>] [-j, --hccl_json <FILE>]

|

||||

[-c, --config <FILE>] [-o, --output <FILE>]

|

||||

[-v, --vocab <FILE>]

|

||||

|

||||

options:

|

||||

-h, --help show usage

|

||||

-t, --task select task: CHAR, 't' for train and 'i' for inference".

|

||||

-n, --device_num device number used for training: N, default is 1.

|

||||

-i, --device_id device id used for training with single device: N, 0<=N<=7, default is 0.

|

||||

-j, --hccl_json rank table file used for training with multiple devices: FILE.

|

||||

-c, --config configuration file as shown in the path 'mass/config': FILE.

|

||||

-o, --output assign output file of inference: FILE.

|

||||

-v, --vocab set the vocabulary"

|

||||

```

|

||||

Notes: Be sure to assign the hccl_json file while running a distributed-training.

|

||||

|

||||

The command followed shows a example for training with 2 devices.

|

||||

```bash

|

||||

sh run.sh --task t --device_num 2 --hccl_json /{path}/rank_table.json --config /{path}/config.json

|

||||

```

|

||||

ps. Discontinuous device id is not supported in `run.sh` at present, device id in `rank_table.json` must start from 0.

|

||||

|

||||

|

||||

If use a single chip, it would be like this:

|

||||

```bash

|

||||

sh run.sh --task t --device_num 1 --device_id 0 --config /{path}/config.json

|

||||

```

|

||||

|

||||

|

||||

## Weights average

|

||||

|

||||

```python

|

||||

python weights_average.py --input_files your_checkpoint_list --output_file model.npz

|

||||

```

|

||||

|

||||

The input_files is a list of you checkpoints file. To use model.npz as the weights, add its path in config.json at "existed_ckpt".

|

||||

```json

|

||||

{

|

||||

...

|

||||

"checkpoint_options": {

|

||||

"existed_ckpt": "/xxx/xxx/model.npz",

|

||||

"save_ckpt_steps": 1000,

|

||||

...

|

||||

},

|

||||

...

|

||||

}

|

||||

```

|

||||

|

||||

|

||||

## Learning rate scheduler

|

||||

|

||||

Two learning rate scheduler are provided in our model:

|

||||

|

||||

1. [Polynomial decay scheduler](https://towardsdatascience.com/learning-rate-schedules-and-adaptive-learning-rate-methods-for-deep-learning-2c8f433990d1).

|

||||

2. [Inverse square root scheduler](https://ece.uwaterloo.ca/~dwharder/aads/Algorithms/Inverse_square_root/).

|

||||

|

||||

LR scheduler could be config in `config/config.json`.

|

||||

|

||||

For Polynomial decay scheduler, config could be like:

|

||||

```json

|

||||

{

|

||||

...

|

||||

"learn_rate_config": {

|

||||

"optimizer": "adam",

|

||||

"lr": 1e-4,

|

||||

"lr_scheduler": "poly",

|

||||

"poly_lr_scheduler_power": 0.5,

|

||||

"decay_steps": 10000,

|

||||

"warmup_steps": 2000,

|

||||

"min_lr": 1e-6

|

||||

},

|

||||

...

|

||||

}

|

||||

```

|

||||

|

||||

For Inverse square root scheduler, config could be like:

|

||||

```json

|

||||

{

|

||||

...

|

||||

"learn_rate_config": {

|

||||

"optimizer": "adam",

|

||||

"lr": 1e-4,

|

||||

"lr_scheduler": "isr",

|

||||

"decay_start_step": 12000,

|

||||

"warmup_steps": 2000,

|

||||

"min_lr": 1e-6

|

||||

},

|

||||

...

|

||||

}

|

||||

```

|

||||

|

||||

More detail about LR scheduler could be found in `src/utils/lr_scheduler.py`.

|

||||

|

||||

|

||||

# Model description

|

||||

|

||||

The MASS network is implemented by Transformer, which has multi-encoder layers and multi-decoder layers.

|

||||

For pre-training, we use the Adam optimizer and loss-scale to get the pre-trained model.

|

||||

During fine-turning, we fine-tune this pre-trained model with different dataset according to different tasks.

|

||||

During testing, we use the fine-turned model to predict the result, and adopt a beam search algorithm to

|

||||

get the most possible prediction results.

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

## Performance

|

||||

|

||||

### Results

|

||||

|

||||

#### Fine-Tuning on Text Summarization

|

||||

The comparisons between MASS and two other pre-training methods in terms of ROUGE score on the text summarization task

|

||||

with 3.8M training data are as follows:

|

||||

|

||||

| Method | RG-1(F) | RG-2(F) | RG-L(F) |

|

||||

|:---------------|:--------------|:-------------|:-------------|

|

||||

| MASS | Ongoing | Ongoing | Ongoing |

|

||||

|

||||

#### Fine-Tuning on Conversational ResponseGeneration

|

||||

The comparisons between MASS and other baseline methods in terms of PPL on Cornell Movie Dialog corpus are as follows:

|

||||

|

||||

| Method | Data = 10K | Data = 110K |

|

||||

|--------------------|------------------|-----------------|

|

||||

| MASS | Ongoing | Ongoing |

|

||||

|

||||

#### Training Performance

|

||||

|

||||

| Parameters | Masked Sequence to Sequence Pre-training for Language Generation |

|

||||

|:---------------------------|:--------------------------------------------------------------------------|

|

||||

| Model Version | v1 |

|

||||

| Resource | Ascend 910, cpu 2.60GHz, 56cores;memory, 314G |

|

||||

| uploaded Date | 05/24/2020 |

|

||||

| MindSpore Version | 0.2.0 |

|

||||

| Dataset | News Crawl 2007-2017 English monolingual corpus, Gigaword corpus, Cornell Movie Dialog corpus |

|

||||

| Training Parameters | Epoch=50, steps=XXX, batch_size=192, lr=1e-4 |

|

||||

| Optimizer | Adam |

|

||||

| Loss Function | Label smoothed cross-entropy criterion |

|

||||

| outputs | Sentence and probability |

|

||||

| Loss | Lower than 2 |

|

||||

| Accuracy | For conversation response, ppl=23.52, for text summarization, RG-1=29.79. |

|

||||

| Speed | 611.45 sentences/s |

|

||||

| Total time | --/-- |

|

||||

| Params (M) | 44.6M |

|

||||

| Checkpoint for Fine tuning | ---Mb, --, [A link]() |

|

||||

| Model for inference | ---Mb, --, [A link]() |

|

||||

| Scripts | [A link]() |

|

||||

|

||||

|

||||

#### Inference Performance

|

||||

|

||||

| Parameters | Masked Sequence to Sequence Pre-training for Language Generation |

|

||||

|:---------------------------|:-----------------------------------------------------------|

|

||||

| Model Version | V1 |

|

||||

| Resource | Huawei 910 |

|

||||

| uploaded Date | 05/24/2020 |

|

||||

| MindSpore Version | 0.2.0 |

|

||||

| Dataset | Gigaword corpus, Cornell Movie Dialog corpus |

|

||||

| batch_size | --- |

|

||||

| outputs | Sentence and probability |

|

||||

| Accuracy | ppl=23.52 for conversation response, RG-1=29.79 for text summarization. |

|

||||

| Speed | ---- sentences/s |

|

||||

| Total time | --/-- |

|

||||

| Model for inference | ---Mb, --, [A link]() |

|

||||

|

||||

|

||||

# Environment Requirements

|

||||

|

||||

## Platform

|

||||

|

||||

- Hardware(Ascend)

|

||||

- Prepare hardware environment with Ascend processor. If you want to try Ascend, please send the [application form](https://obs-9be7.obs.cn-east-2.myhuaweicloud.com/file/other/Ascend%20Model%20Zoo%E4%BD%93%E9%AA%8C%E8%B5%84%E6%BA%90%E7%94%B3%E8%AF%B7%E8%A1%A8.docx) to ascend@huawei.com. Once approved, you could get the resources for trial.

|

||||

- Framework

|

||||

- [MindSpore](http://10.90.67.50/mindspore/archive/20200506/OpenSource/me_vm_x86/)

|

||||

- For more information, please check the resources below:

|

||||

- [MindSpore tutorials](https://www.mindspore.cn/tutorial/zh-CN/master/index.html)

|

||||

- [MindSpore API](https://www.mindspore.cn/api/zh-CN/master/index.html)

|

||||

|

||||

## Requirements

|

||||

|

||||

```txt

|

||||

nltk

|

||||

numpy

|

||||

subword-nmt

|

||||

rouge

|

||||

```

|

||||

|

||||

https://www.mindspore.cn/tutorial/zh-CN/master/advanced_use/network_migration.html

|

||||

|

||||

|

||||

# Get started

|

||||

MASS pre-trains a sequence to sequence model by predicting the masked fragments in an input sequence. After this, downstream tasks including text summarization and conversation response are candidated for fine-tuning the model and for inference.

|

||||

Here we provide a practice example to demonstrate the basic usage of MASS for pre-training, fine-tuning a model, and the inference process. The overall process is as follows:

|

||||

1. Download and process the dataset.

|

||||

2. Modify the `config.json` to config the network.

|

||||

3. Run a task for pre-training and fine-tuning.

|

||||

4. Perform inference and validation.

|

||||

|

||||

## Pre-training

|

||||

For pre-training a model, config the options in `config.json` firstly:

|

||||

- Assign the `pre_train_dataset` under `dataset_config` node to the dataset path.

|

||||

- Choose the optimizer('momentum/adam/lamb' is available).

|

||||

- Assign the 'ckpt_prefix' and 'ckpt_path' under `checkpoint_path` to save the model files.

|

||||

- Set other arguments including dataset configurations and network configurations.

|

||||

- If you have a trained model already, assign the `existed_ckpt` to the checkpoint file.

|

||||

|

||||

Run the shell script `run.sh` as followed:

|

||||

|

||||

```bash

|

||||

sh run.sh -t t -n 1 -i 1 -c /mass/config/config.json

|

||||

```

|

||||

Get the log and output files under the path `./run_mass_*/`, and the model file under the path assigned in the `config/config.json` file.

|

||||

|

||||

## Fine-tuning

|

||||

For fine-tuning a model, config the options in `config.json` firstly:

|

||||

- Assign the `fine_tune_dataset` under `dataset_config` node to the dataset path.

|

||||

- Assign the `existed_ckpt` under `checkpoint_path` node to the existed model file generated by pre-training.

|

||||

- Choose the optimizer('momentum/adam/lamb' is available).

|

||||

- Assign the `ckpt_prefix` and `ckpt_path` under `checkpoint_path` node to save the model files.

|

||||

- Set other arguments including dataset configurations and network configurations.

|

||||

|

||||

Run the shell script `run.sh` as followed:

|

||||

```bash

|

||||

sh run.sh -t t -n 1 -i 1 -c config/config.json

|

||||

```

|

||||

Get the log and output files under the path `./run_mass_*/`, and the model file under the path assigned in the `config/config.json` file.

|

||||

|

||||

## Inference

|

||||

If you need to use the trained model to perform inference on multiple hardware platforms, such as GPU, Ascend 910 or Ascend 310, you can refer to this [Link](https://www.mindspore.cn/tutorial/zh-CN/master/advanced_use/network_migration.html).

|

||||

For inference, config the options in `config.json` firstly:

|

||||

- Assign the `test_dataset` under `dataset_config` node to the dataset path.

|

||||

- Assign the `existed_ckpt` under `checkpoint_path` node to the model file produced by fine-tuning.

|

||||

- Choose the optimizer('momentum/adam/lamb' is available).

|

||||

- Assign the `ckpt_prefix` and `ckpt_path` under `checkpoint_path` node to save the model files.

|

||||

- Set other arguments including dataset configurations and network configurations.

|

||||

|

||||

Run the shell script `run.sh` as followed:

|

||||

|

||||

```bash

|

||||

sh run.sh -t i -n 1 -i 1 -c config/config.json -o {outputfile}

|

||||

```

|

||||

|

||||

# Description of random situation

|

||||

|

||||

MASS model contains dropout operations, if you want to disable dropout, please set related dropout_rate to 0 in `config/config.json`.

|

||||

|

||||

|

||||

# others

|

||||

The model has been validated on Ascend environment, not validated on CPU and GPU.

|

||||

|

||||

|

||||

# ModelZoo Homepage

|

||||

[Link](https://gitee.com/mindspore/mindspore/tree/master/mindspore/model_zoo)

|

||||

|

|

@ -0,0 +1,84 @@

|

|||

# Copyright 2020 Huawei Technologies Co., Ltd

|

||||

#

|

||||

# Licensed under the Apache License, Version 2.0 (the "License");

|

||||

# you may not use this file except in compliance with the License.

|

||||

# You may obtain a copy of the License at

|

||||

#

|

||||

# http://www.apache.org/licenses/LICENSE-2.0

|

||||

#

|

||||

# Unless required by applicable law or agreed to in writing, software

|

||||

# distributed under the License is distributed on an "AS IS" BASIS,

|

||||

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

||||

# See the License for the specific language governing permissions and

|

||||

# limitations under the License.

|

||||

# ============================================================================

|

||||

"""Apply bpe script."""

|

||||

import os

|

||||

import argparse

|

||||

from multiprocessing import Pool, cpu_count

|

||||

|

||||

from src.utils import Dictionary

|

||||

from src.utils import bpe_encode

|

||||

|

||||

parser = argparse.ArgumentParser(description='Apply BPE.')

|

||||

parser.add_argument("--codes", type=str, default="", required=True,

|

||||

help="bpe codes path.")

|

||||

parser.add_argument("--src_folder", type=str, default="", required=True,

|

||||

help="raw corpus folder.")

|

||||

parser.add_argument("--output_folder", type=str, default="", required=True,

|

||||

help="encoded corpus output path.")

|

||||

parser.add_argument("--prefix", type=str, default="", required=False,

|

||||

help="Prefix of text file.")

|

||||

parser.add_argument("--vocab_path", type=str, default="", required=True,

|

||||

help="Generated vocabulary output path.")

|

||||

parser.add_argument("--threshold", type=int, default=None, required=False,

|

||||

help="Filter out words that frequency is lower than threshold.")

|

||||

parser.add_argument("--processes", type=int, default=2, required=False,

|

||||

help="Number of processes to use.")

|

||||

|

||||

if __name__ == '__main__':

|

||||

args, _ = parser.parse_known_args()

|

||||

|

||||

if not (args.codes and args.src_folder and args.output_folder):

|

||||

raise ValueError("Please enter required params.")

|

||||

|

||||

source_folder = args.src_folder

|

||||

output_folder = args.output_folder

|

||||

codes = args.codes

|

||||

|

||||

if not os.path.exists(codes):

|

||||

raise FileNotFoundError("`--codes` is not existed.")

|

||||

if not os.path.exists(source_folder) or not os.path.isdir(source_folder):

|

||||

raise ValueError("`--src_folder` must be a dir and existed.")

|

||||

if not os.path.exists(output_folder) or not os.path.isdir(output_folder):

|

||||

raise ValueError("`--output_folder` must be a dir and existed.")

|

||||

if not isinstance(args.prefix, str) or len(args.prefix) > 128:

|

||||

raise ValueError("`--prefix` must be a str and len <= 128.")

|

||||

if not isinstance(args.processes, int):

|

||||

raise TypeError("`--processes` must be an integer.")

|

||||

|

||||

available_dict = []

|

||||

args_groups = []

|

||||

for file in os.listdir(source_folder):

|

||||

if args.prefix and not file.startswith(args.prefix):

|

||||

continue

|

||||

if file.endswith(".txt"):

|

||||

output_path = os.path.join(output_folder, file.replace(".txt", "_bpe.txt"))

|

||||

dict_path = os.path.join(output_folder, file.replace(".txt", ".dict"))

|

||||

available_dict.append(dict_path)

|

||||

args_groups.append((codes, os.path.join(source_folder, file),

|

||||

output_path, dict_path))

|

||||

|

||||

kernel_size = 1 if args.processes <= 0 else args.processes

|

||||

kernel_size = min(kernel_size, cpu_count())

|

||||

pool = Pool(kernel_size)

|

||||

for arg in args_groups:

|

||||

pool.apply_async(bpe_encode, args=arg)

|

||||

pool.close()

|

||||

pool.join()

|

||||

|

||||

vocab = Dictionary.load_from_text(available_dict)

|

||||

if args.threshold is not None:

|

||||

vocab = vocab.shrink(args.threshold)

|

||||

vocab.persistence(args.vocab_path)

|

||||

print(f" | Vocabulary Size: {len(vocab)}")

|

||||

|

|

@ -0,0 +1,20 @@

|

|||

# Copyright 2020 Huawei Technologies Co., Ltd

|

||||

#

|

||||

# Licensed under the Apache License, Version 2.0 (the "License");

|

||||

# you may not use this file except in compliance with the License.

|

||||

# You may obtain a copy of the License at

|

||||

#

|

||||

# http://www.apache.org/licenses/LICENSE-2.0

|

||||

#

|

||||

# Unless required by applicable law or agreed to in writing, software

|

||||

# distributed under the License is distributed on an "AS IS" BASIS,

|

||||

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

||||

# See the License for the specific language governing permissions and

|

||||

# limitations under the License.

|

||||

# ============================================================================

|

||||

"""MASS model configuration."""

|

||||

from .config import TransformerConfig

|

||||

|

||||

__all__ = [

|

||||

"TransformerConfig"

|

||||

]

|

||||

|

|

@ -0,0 +1,54 @@

|

|||

{

|

||||

"dataset_config": {

|

||||

"epochs": 20,

|

||||

"batch_size": 192,

|

||||

"pre_train_dataset": "",

|

||||

"fine_tune_dataset": "",

|

||||

"test_dataset": "",

|

||||

"valid_dataset": "",

|

||||

"dataset_sink_mode": false,

|

||||

"dataset_sink_step": 100

|

||||

},

|

||||

"model_config": {

|

||||

"random_seed": 100,

|

||||

"save_graphs": false,

|

||||

"seq_length": 64,

|

||||

"vocab_size": 45744,

|

||||

"hidden_size": 1024,

|

||||

"num_hidden_layers": 6,

|

||||

"num_attention_heads": 8,

|

||||

"intermediate_size": 4096,

|

||||

"hidden_act": "relu",

|

||||

"hidden_dropout_prob": 0.2,

|

||||

"attention_dropout_prob": 0.2,

|

||||

"max_position_embeddings": 64,

|

||||

"initializer_range": 0.02,

|

||||

"label_smoothing": 0.1,

|

||||

"beam_width": 4,

|

||||

"length_penalty_weight": 1.0,

|

||||

"max_decode_length": 64,

|

||||

"input_mask_from_dataset": true

|

||||

},

|

||||

"loss_scale_config": {

|

||||

"init_loss_scale": 65536,

|

||||

"loss_scale_factor": 2,

|

||||

"scale_window": 200

|

||||

},

|

||||

"learn_rate_config": {

|

||||

"optimizer": "adam",

|

||||

"lr": 1e-4,

|

||||

"lr_scheduler": "poly",

|

||||

"poly_lr_scheduler_power": 0.5,

|

||||

"decay_steps": 10000,

|

||||

"decay_start_step": 12000,

|

||||

"warmup_steps": 4000,

|

||||

"min_lr": 1e-6

|

||||

},

|

||||

"checkpoint_options": {

|

||||

"existed_ckpt": "",

|

||||

"save_ckpt_steps": 2500,

|

||||

"keep_ckpt_max": 50,

|

||||

"ckpt_prefix": "ckpt",

|

||||

"ckpt_path": "checkpoints"

|

||||

}

|

||||

}

|

||||

|

|

@ -0,0 +1,232 @@

|

|||

# Copyright 2020 Huawei Technologies Co., Ltd

|

||||

#

|

||||

# Licensed under the Apache License, Version 2.0 (the "License");

|

||||

# you may not use this file except in compliance with the License.

|

||||

# You may obtain a copy of the License at

|

||||

#

|

||||

# http://www.apache.org/licenses/LICENSE-2.0

|

||||

#

|

||||

# Unless required by applicable law or agreed to in writing, software

|

||||

# distributed under the License is distributed on an "AS IS" BASIS,

|

||||

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

||||

# See the License for the specific language governing permissions and

|

||||

# limitations under the License.

|

||||

# ============================================================================

|

||||

"""Configuration class for Transformer."""

|

||||

import os

|

||||

import json

|

||||

import copy

|

||||

from typing import List

|

||||

|

||||

import mindspore.common.dtype as mstype

|

||||

|

||||

|

||||

def _is_dataset_file(file: str):

|

||||

return "tfrecord" in file.lower() or "mindrecord" in file.lower()

|

||||

|

||||

|

||||

def _get_files_from_dir(folder: str):

|

||||

_files = []

|

||||

for file in os.listdir(folder):

|

||||

if _is_dataset_file(file):

|

||||

_files.append(os.path.join(folder, file))

|

||||

return _files

|

||||

|

||||

|

||||

def get_source_list(folder: str) -> List:

|

||||

"""

|

||||

Get file list from a folder.

|

||||

|

||||

Returns:

|

||||

list, file list.

|

||||

"""

|

||||

_list = []

|

||||

if not folder:

|

||||

return _list

|

||||

|

||||

if os.path.isdir(folder):

|

||||

_list = _get_files_from_dir(folder)

|

||||

else:

|

||||

if _is_dataset_file(folder):

|

||||

_list.append(folder)

|

||||

return _list

|

||||

|

||||

|

||||

PARAM_NODES = {"dataset_config",

|

||||

"model_config",

|

||||

"loss_scale_config",

|

||||

"learn_rate_config",

|

||||

"checkpoint_options"}

|

||||

|

||||

|

||||

class TransformerConfig:

|

||||

"""

|

||||

Configuration for `Transformer`.

|

||||

|

||||

Args:

|

||||

random_seed (int): Random seed.

|

||||

batch_size (int): Batch size of input dataset.

|

||||

epochs (int): Epoch number.

|

||||

dataset_sink_mode (bool): Whether enable dataset sink mode.

|

||||

dataset_sink_step (int): Dataset sink step.

|

||||

lr_scheduler (str): Whether use lr_scheduler, only support "ISR" now.

|

||||

lr (float): Initial learning rate.

|

||||

min_lr (float): Minimum learning rate.

|

||||

decay_start_step (int): Step to decay.

|

||||

warmup_steps (int): Warm up steps.

|

||||

dataset_schema (str): Path of dataset schema file.

|

||||

pre_train_dataset (str): Path of pre-training dataset file or folder.

|

||||

fine_tune_dataset (str): Path of fine-tune dataset file or folder.

|

||||

test_dataset (str): Path of test dataset file or folder.

|

||||

valid_dataset (str): Path of validation dataset file or folder.

|

||||

ckpt_path (str): Checkpoints save path.

|

||||

save_ckpt_steps (int): Interval of saving ckpt.

|

||||

ckpt_prefix (str): Prefix of ckpt file.

|

||||

keep_ckpt_max (int): Max ckpt files number.

|

||||

seq_length (int): Length of input sequence. Default: 64.

|

||||

vocab_size (int): The shape of each embedding vector. Default: 46192.

|

||||

hidden_size (int): Size of embedding, attention, dim. Default: 512.

|

||||

num_hidden_layers (int): Encoder, Decoder layers.

|

||||

num_attention_heads (int): Number of hidden layers in the Transformer encoder/decoder

|

||||

cell. Default: 6.

|

||||

intermediate_size (int): Size of intermediate layer in the Transformer

|

||||

encoder/decoder cell. Default: 4096.

|

||||

hidden_act (str): Activation function used in the Transformer encoder/decoder

|

||||

cell. Default: "relu".

|

||||

init_loss_scale (int): Initialized loss scale.

|

||||

loss_scale_factor (int): Loss scale factor.

|

||||

scale_window (int): Window size of loss scale.

|

||||

beam_width (int): Beam width for beam search in inferring. Default: 4.

|

||||

length_penalty_weight (float): Penalty for sentence length. Default: 1.0.

|

||||

label_smoothing (float): Label smoothing setting. Default: 0.1.

|

||||

input_mask_from_dataset (bool): Specifies whether to use the input mask that loaded from

|

||||

dataset. Default: True.

|

||||

save_graphs (bool): Whether to save graphs, please set to True if mindinsight

|

||||

is wanted.

|

||||

dtype (mstype): Data type of the input. Default: mstype.float32.

|

||||

max_decode_length (int): Max decode length for inferring. Default: 64.

|

||||

hidden_dropout_prob (float): The dropout probability for hidden outputs. Default: 0.1.

|

||||

attention_dropout_prob (float): The dropout probability for

|

||||

Multi-head Self-Attention. Default: 0.1.

|

||||

max_position_embeddings (int): Maximum length of sequences used in this

|

||||

model. Default: 512.

|

||||

initializer_range (float): Initialization value of TruncatedNormal. Default: 0.02.

|

||||

"""

|

||||

|

||||

def __init__(self,

|

||||

random_seed=74,

|

||||

batch_size=64, epochs=1,

|

||||

dataset_sink_mode=True, dataset_sink_step=1,

|

||||

lr_scheduler="", optimizer="adam",

|

||||

lr=1e-4, min_lr=1e-6,

|

||||

decay_steps=10000, poly_lr_scheduler_power=1,

|

||||

decay_start_step=-1, warmup_steps=2000,

|

||||

pre_train_dataset: str = None,

|

||||

fine_tune_dataset: str = None,

|

||||

test_dataset: str = None,

|

||||

valid_dataset: str = None,

|

||||

ckpt_path: str = None,

|

||||

save_ckpt_steps=2000,

|

||||

ckpt_prefix="CKPT",

|

||||

existed_ckpt="",

|

||||

keep_ckpt_max=20,

|

||||

seq_length=128,

|

||||

vocab_size=46192,

|

||||

hidden_size=512,

|

||||

num_hidden_layers=6,

|

||||

num_attention_heads=8,

|

||||

intermediate_size=4096,

|

||||

hidden_act="relu",

|

||||

hidden_dropout_prob=0.1,

|

||||

attention_dropout_prob=0.1,

|

||||

max_position_embeddings=64,

|

||||

initializer_range=0.02,

|

||||

init_loss_scale=2 ** 10,

|

||||

loss_scale_factor=2, scale_window=2000,

|

||||

beam_width=5,

|

||||

length_penalty_weight=1.0,

|

||||

label_smoothing=0.1,

|

||||

input_mask_from_dataset=True,

|

||||

save_graphs=False,

|

||||

dtype=mstype.float32,

|

||||

max_decode_length=64):

|

||||

|

||||

self.save_graphs = save_graphs

|

||||

self.random_seed = random_seed

|

||||

self.pre_train_dataset = get_source_list(pre_train_dataset) # type: List[str]

|

||||

self.fine_tune_dataset = get_source_list(fine_tune_dataset) # type: List[str]

|

||||

self.valid_dataset = get_source_list(valid_dataset) # type: List[str]

|

||||

self.test_dataset = get_source_list(test_dataset) # type: List[str]

|

||||

|

||||

if not isinstance(epochs, int) and epochs < 0:

|

||||

raise ValueError("`epoch` must be type of int.")

|

||||

|

||||

self.epochs = epochs

|

||||

self.dataset_sink_mode = dataset_sink_mode

|

||||

self.dataset_sink_step = dataset_sink_step

|

||||

|

||||

self.ckpt_path = ckpt_path

|

||||

self.keep_ckpt_max = keep_ckpt_max

|

||||

self.save_ckpt_steps = save_ckpt_steps

|

||||

self.ckpt_prefix = ckpt_prefix

|

||||

self.existed_ckpt = existed_ckpt

|

||||

|

||||

self.batch_size = batch_size

|

||||

self.seq_length = seq_length

|

||||

self.vocab_size = vocab_size

|

||||

self.hidden_size = hidden_size

|

||||

self.num_hidden_layers = num_hidden_layers

|

||||

self.num_attention_heads = num_attention_heads

|

||||

self.hidden_act = hidden_act

|

||||

self.intermediate_size = intermediate_size

|

||||

self.hidden_dropout_prob = hidden_dropout_prob

|

||||

self.attention_dropout_prob = attention_dropout_prob

|

||||

self.max_position_embeddings = max_position_embeddings

|

||||

self.initializer_range = initializer_range

|

||||

self.label_smoothing = label_smoothing

|

||||

|

||||

self.beam_width = beam_width

|

||||

self.length_penalty_weight = length_penalty_weight

|

||||

self.max_decode_length = max_decode_length

|

||||

self.input_mask_from_dataset = input_mask_from_dataset

|

||||

self.compute_type = mstype.float16

|

||||

self.dtype = dtype

|

||||

|

||||

self.scale_window = scale_window

|

||||

self.loss_scale_factor = loss_scale_factor

|

||||

self.init_loss_scale = init_loss_scale

|

||||

|

||||

self.optimizer = optimizer

|

||||

self.lr = lr

|

||||

self.lr_scheduler = lr_scheduler

|

||||

self.min_lr = min_lr

|

||||

self.poly_lr_scheduler_power = poly_lr_scheduler_power

|

||||

self.decay_steps = decay_steps

|

||||

self.decay_start_step = decay_start_step

|

||||

self.warmup_steps = warmup_steps

|

||||

|

||||

self.train_url = ""

|

||||

|

||||

@classmethod

|

||||

def from_dict(cls, json_object: dict):

|

||||

"""Constructs a `TransformerConfig` from a Python dictionary of parameters."""

|

||||

_params = {}

|

||||

for node in PARAM_NODES:

|

||||

for key in json_object[node]:

|

||||

_params[key] = json_object[node][key]

|

||||

return cls(**_params)

|

||||

|

||||

@classmethod

|

||||

def from_json_file(cls, json_file):

|

||||

"""Constructs a `TransformerConfig` from a json file of parameters."""

|

||||

with open(json_file, "r") as reader:

|

||||

return cls.from_dict(json.load(reader))

|

||||

|

||||

def to_dict(self):

|

||||

"""Serializes this instance to a Python dictionary."""

|

||||

output = copy.deepcopy(self.__dict__)

|

||||

return output

|

||||

|

||||

def to_json_string(self):

|

||||

"""Serializes this instance to a JSON string."""

|

||||

return json.dumps(self.to_dict(), indent=2, sort_keys=True) + "\n"

|

||||

|

|

@ -0,0 +1,110 @@

|

|||

# Copyright 2020 Huawei Technologies Co., Ltd

|

||||

#

|

||||

# Licensed under the Apache License, Version 2.0 (the "License");

|

||||

# you may not use this file except in compliance with the License.

|

||||

# You may obtain a copy of the License at

|

||||

#

|

||||

# http://www.apache.org/licenses/LICENSE-2.0

|

||||

#

|

||||

# Unless required by applicable law or agreed to in writing, software

|

||||

# distributed under the License is distributed on an "AS IS" BASIS,

|

||||

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

||||

# See the License for the specific language governing permissions and

|

||||

# limitations under the License.

|

||||

# ============================================================================

|

||||

"""Generate Cornell Movie Dialog dataset."""

|

||||

import os

|

||||

import argparse

|

||||

from src.dataset import BiLingualDataLoader

|

||||

from src.language_model import NoiseChannelLanguageModel

|

||||

from src.utils import Dictionary

|

||||

|

||||

parser = argparse.ArgumentParser(description='Generate Cornell Movie Dialog dataset file.')

|

||||

parser.add_argument("--src_folder", type=str, default="", required=True,

|

||||

help="Raw corpus folder.")

|

||||

parser.add_argument("--existed_vocab", type=str, default="", required=True,

|

||||

help="Existed vocabulary.")

|

||||

parser.add_argument("--train_prefix", type=str, default="train", required=False,

|

||||

help="Prefix of train file.")

|

||||

parser.add_argument("--test_prefix", type=str, default="test", required=False,

|

||||

help="Prefix of test file.")

|

||||

parser.add_argument("--valid_prefix", type=str, default=None, required=False,

|

||||

help="Prefix of valid file.")

|

||||

parser.add_argument("--noise_prob", type=float, default=0., required=False,

|

||||

help="Add noise prob.")

|

||||

parser.add_argument("--max_len", type=int, default=32, required=False,

|

||||

help="Max length of sentence.")

|

||||

parser.add_argument("--output_folder", type=str, default="", required=True,

|

||||

help="Dataset output path.")

|

||||

|

||||

if __name__ == '__main__':

|

||||

args, _ = parser.parse_known_args()

|

||||

|

||||

dicts = []

|

||||

train_src_file = ""

|

||||

train_tgt_file = ""

|

||||

test_src_file = ""

|

||||

test_tgt_file = ""

|

||||

valid_src_file = ""

|

||||

valid_tgt_file = ""

|

||||

for file in os.listdir(args.src_folder):

|

||||

if file.startswith(args.train_prefix) and "src" in file and file.endswith(".txt"):

|

||||

train_src_file = os.path.join(args.src_folder, file)

|

||||

elif file.startswith(args.train_prefix) and "tgt" in file and file.endswith(".txt"):

|

||||

train_tgt_file = os.path.join(args.src_folder, file)

|

||||

elif file.startswith(args.test_prefix) and "src" in file and file.endswith(".txt"):

|

||||

test_src_file = os.path.join(args.src_folder, file)

|

||||

elif file.startswith(args.test_prefix) and "tgt" in file and file.endswith(".txt"):

|

||||

test_tgt_file = os.path.join(args.src_folder, file)

|

||||

elif args.valid_prefix and file.startswith(args.valid_prefix) and "src" in file and file.endswith(".txt"):

|

||||

valid_src_file = os.path.join(args.src_folder, file)

|

||||

elif args.valid_prefix and file.startswith(args.valid_prefix) and "tgt" in file and file.endswith(".txt"):

|

||||

valid_tgt_file = os.path.join(args.src_folder, file)

|

||||

else:

|

||||

continue

|

||||

|

||||

vocab = Dictionary.load_from_persisted_dict(args.existed_vocab)

|

||||

|

||||

if train_src_file and train_tgt_file:

|

||||

BiLingualDataLoader(

|

||||

src_filepath=train_src_file,

|

||||

tgt_filepath=train_tgt_file,

|

||||

src_dict=vocab, tgt_dict=vocab,

|

||||

src_lang="en", tgt_lang="en",

|

||||

language_model=NoiseChannelLanguageModel(add_noise_prob=args.noise_prob),

|

||||

max_sen_len=args.max_len

|

||||

).write_to_tfrecord(

|

||||

path=os.path.join(

|

||||

args.output_folder, "train_cornell_dialog.tfrecord"

|

||||

)

|

||||

)

|

||||

|

||||

if test_src_file and test_tgt_file:

|

||||

BiLingualDataLoader(

|

||||

src_filepath=test_src_file,

|

||||

tgt_filepath=test_tgt_file,

|

||||

src_dict=vocab, tgt_dict=vocab,

|

||||

src_lang="en", tgt_lang="en",

|

||||

language_model=NoiseChannelLanguageModel(add_noise_prob=0.),

|

||||

max_sen_len=args.max_len

|

||||

).write_to_tfrecord(

|

||||

path=os.path.join(

|

||||

args.output_folder, "test_cornell_dialog.tfrecord"

|

||||

)

|

||||

)

|

||||

|

||||

if args.valid_prefix:

|

||||

BiLingualDataLoader(

|

||||

src_filepath=os.path.join(args.src_folder, valid_src_file),

|

||||

tgt_filepath=os.path.join(args.src_folder, valid_tgt_file),

|

||||

src_dict=vocab, tgt_dict=vocab,

|

||||

src_lang="en", tgt_lang="en",

|

||||

language_model=NoiseChannelLanguageModel(add_noise_prob=0.),

|

||||

max_sen_len=args.max_len

|

||||

).write_to_tfrecord(

|

||||

path=os.path.join(

|

||||

args.output_folder, "valid_cornell_dialog.tfrecord"

|

||||

)

|

||||

)

|

||||

|

||||

print(f" | Vocabulary size: {vocab.size}.")

|

||||

|

|

@ -0,0 +1,75 @@

|

|||

# Copyright 2020 Huawei Technologies Co., Ltd

|

||||

#

|

||||

# Licensed under the Apache License, Version 2.0 (the "License");

|

||||

# you may not use this file except in compliance with the License.

|

||||

# You may obtain a copy of the License at

|

||||

#

|

||||

# http://www.apache.org/licenses/LICENSE-2.0

|

||||

#

|

||||

# Unless required by applicable law or agreed to in writing, software

|

||||

# distributed under the License is distributed on an "AS IS" BASIS,

|

||||

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

||||

# See the License for the specific language governing permissions and

|

||||

# limitations under the License.

|

||||

# ============================================================================

|

||||

"""Evaluation api."""

|

||||

import argparse

|

||||

import pickle

|

||||

import numpy as np

|

||||

|

||||

from mindspore.common import dtype as mstype

|

||||

|

||||

from config import TransformerConfig

|

||||

from src.transformer import infer

|

||||

from src.utils import ngram_ppl

|

||||

from src.utils import Dictionary

|

||||

from src.utils import rouge

|

||||

|

||||

parser = argparse.ArgumentParser(description='Evaluation MASS.')

|

||||

parser.add_argument("--config", type=str, required=True,

|

||||

help="Model config json file path.")

|

||||

parser.add_argument("--vocab", type=str, required=True,

|

||||

help="Vocabulary to use.")

|

||||

parser.add_argument("--output", type=str, required=True,

|

||||

help="Result file path.")

|

||||

|

||||

|

||||

def get_config(config):

|

||||

config = TransformerConfig.from_json_file(config)

|

||||

config.compute_type = mstype.float16

|

||||

config.dtype = mstype.float32

|

||||

return config

|

||||

|

||||

|

||||

if __name__ == '__main__':

|

||||

args, _ = parser.parse_known_args()

|

||||

vocab = Dictionary.load_from_persisted_dict(args.vocab)

|

||||

_config = get_config(args.config)

|

||||

result = infer(_config)

|

||||

with open(args.output, "wb") as f:

|

||||

pickle.dump(result, f, 1)

|

||||

|

||||

ppl_score = 0.

|

||||

preds = []

|

||||

tgts = []

|

||||

_count = 0

|

||||

for sample in result:

|

||||

sentence_prob = np.array(sample['prediction_prob'], dtype=np.float32)

|

||||

sentence_prob = sentence_prob[:, 1:]

|

||||

_ppl = []

|

||||

for path in sentence_prob:

|

||||

_ppl.append(ngram_ppl(path, log_softmax=True))

|

||||

ppl = np.min(_ppl)

|

||||

preds.append(' '.join([vocab[t] for t in sample['prediction']]))

|

||||

tgts.append(' '.join([vocab[t] for t in sample['target']]))

|

||||

print(f" | source: {' '.join([vocab[t] for t in sample['source']])}")

|

||||

print(f" | target: {tgts[-1]}")

|

||||

print(f" | prediction: {preds[-1]}")

|

||||

print(f" | ppl: {ppl}.")

|

||||

if np.isinf(ppl):

|

||||

continue

|

||||

ppl_score += ppl

|

||||

_count += 1

|

||||

|

||||

print(f" | PPL={ppl_score / _count}.")

|

||||

rouge(preds, tgts)

|

||||

|

|

@ -0,0 +1,84 @@

|

|||

# Copyright 2020 Huawei Technologies Co., Ltd

|

||||

#

|

||||

# Licensed under the Apache License, Version 2.0 (the "License");

|

||||

# you may not use this file except in compliance with the License.

|

||||

# You may obtain a copy of the License at

|

||||

#

|

||||

# http://www.apache.org/licenses/LICENSE-2.0

|

||||

#

|

||||

# Unless required by applicable law or agreed to in writing, software

|

||||

# distributed under the License is distributed on an "AS IS" BASIS,

|

||||

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

||||

# See the License for the specific language governing permissions and

|

||||

# limitations under the License.

|

||||

# ============================================================================

|

||||

"""Generate Gigaword dataset."""

|

||||

import os

|

||||

import argparse

|

||||

|

||||

from src.dataset import BiLingualDataLoader

|

||||

from src.language_model import NoiseChannelLanguageModel

|

||||

from src.utils import Dictionary

|

||||

|

||||

parser = argparse.ArgumentParser(description='Create Gigaword fine-tune Dataset.')

|

||||

parser.add_argument("--train_src", type=str, default="", required=False,

|

||||

help="train dataset source file path.")

|

||||

parser.add_argument("--train_ref", type=str, default="", required=False,

|

||||

help="train dataset reference file path.")

|

||||

parser.add_argument("--test_src", type=str, default="", required=False,

|

||||

help="test dataset source file path.")

|

||||

parser.add_argument("--test_ref", type=str, default="", required=False,

|

||||

help="test dataset reference file path.")

|

||||

parser.add_argument("--noise_prob", type=float, default=0., required=False,

|

||||

help="add noise prob.")

|

||||

parser.add_argument("--existed_vocab", type=str, default="", required=False,

|

||||

help="existed vocab path.")

|

||||

parser.add_argument("--max_len", type=int, default=64, required=False,

|

||||

help="max length of sentences.")

|

||||

parser.add_argument("--output_folder", type=str, default="", required=True,

|

||||

help="dataset output path.")

|

||||

parser.add_argument("--format", type=str, default="tfrecord", required=False,

|

||||

help="dataset format.")

|

||||

|

||||

if __name__ == '__main__':

|

||||

args, _ = parser.parse_known_args()

|

||||

|

||||

vocab = Dictionary.load_from_persisted_dict(args.existed_vocab)

|

||||

|

||||

if args.train_src and args.train_ref:

|

||||

train = BiLingualDataLoader(

|

||||

src_filepath=args.train_src,

|

||||

tgt_filepath=args.train_ref,

|

||||

src_dict=vocab, tgt_dict=vocab,

|

||||

src_lang="en", tgt_lang="en",

|

||||

language_model=NoiseChannelLanguageModel(add_noise_prob=args.noise_prob),

|

||||

max_sen_len=args.max_len

|

||||

)

|

||||

if "tf" in args.format.lower():

|

||||

train.write_to_tfrecord(

|

||||

path=os.path.join(args.output_folder, "gigaword_train_dataset.tfrecord")

|

||||

)

|

||||

else:

|

||||

train.write_to_mindrecord(

|

||||

path=os.path.join(args.output_folder, "gigaword_train_dataset.mindrecord")

|

||||

)

|

||||

|

||||

if args.test_src and args.test_ref:

|

||||

test = BiLingualDataLoader(

|

||||

src_filepath=args.test_src,

|

||||

tgt_filepath=args.test_ref,

|

||||

src_dict=vocab, tgt_dict=vocab,

|

||||

src_lang="en", tgt_lang="en",

|

||||

language_model=NoiseChannelLanguageModel(add_noise_prob=0),

|

||||

max_sen_len=args.max_len

|

||||

)

|

||||

if "tf" in args.format.lower():

|

||||

test.write_to_tfrecord(

|

||||

path=os.path.join(args.output_folder, "gigaword_test_dataset.tfrecord")

|

||||

)

|

||||

else:

|

||||

test.write_to_mindrecord(

|

||||

path=os.path.join(args.output_folder, "gigaword_test_dataset.mindrecord")

|

||||

)

|

||||

|

||||

print(f" | Vocabulary size: {vocab.size}.")

|

||||

|

|

@ -0,0 +1,58 @@

|

|||

# Copyright 2020 Huawei Technologies Co., Ltd

|

||||

#

|

||||

# Licensed under the Apache License, Version 2.0 (the "License");

|

||||

# you may not use this file except in compliance with the License.

|

||||

# You may obtain a copy of the License at

|

||||

#

|

||||